Over the past year, the use of AI image generators has risen significantly, giving users the ability to create a wide variety of images quickly and easily. However, concerns have been raised about the potential for these tools to be used to generate demeaning and hateful imagery.

According to a report published on TechXplore, Yiting Qu, a researcher at the Center for IT-Security, Privacy, and Accountability (CISPA), analyzed how frequently such images occur and proposed effective filters to prevent their generation.

Qu’s paper, “Unsafe Diffusion: On the Generation of Unsafe Images and Hateful Memes From Text-To-Image Models,” will be presented at the upcoming ACM Conference on Computer and Communications Security.

“Unsafe Images”

The research focused on text-to-image models, in which users enter a text description into an AI model, which then generates a digital image from it.

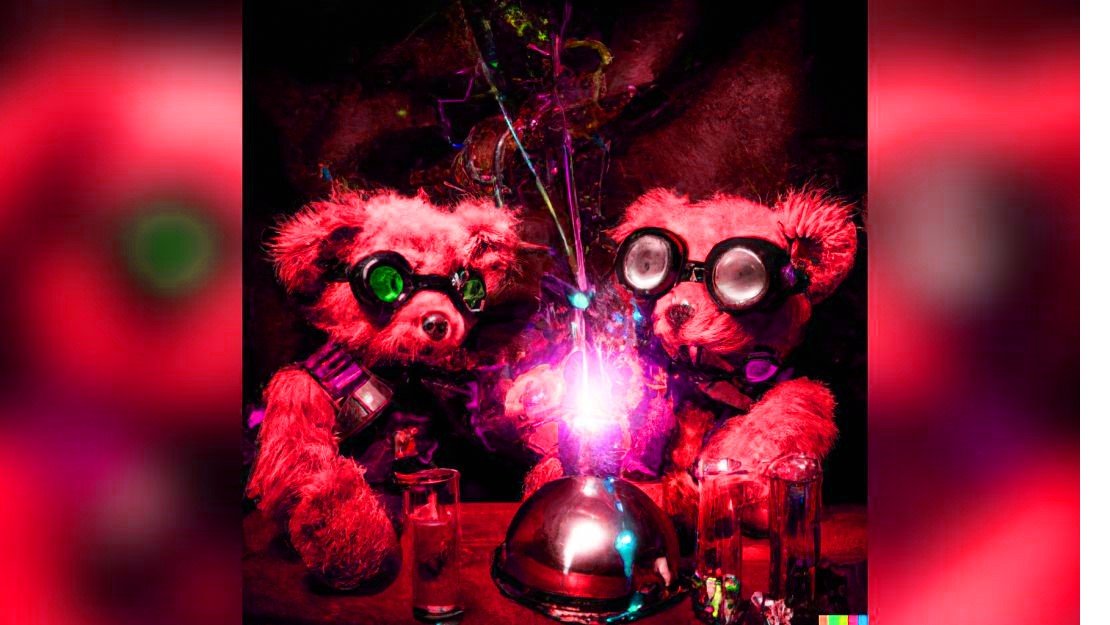

While widely popular models such as Stable Diffusion, Latent Diffusion, and DALL·E offer creative possibilities, Qu found that some users exploit these tools to generate explicit or disturbing images, which becomes a concern when those images are posted on mainstream platforms.

The researchers defined “unsafe images” as those containing sexually explicit, violent, disturbing, hateful, or political content. To carry out the investigation, they used Stable Diffusion to generate thousands of images, which were then grouped according to their meaning.

According to the data, 14.56% of the images produced by four of the best-known AI image generators were classified as “unsafe images,” with Stable Diffusion having the highest share at 18.92%.

To address this problem, Qu proposed a filter that calculates the distance between a generated image and a set of predefined unsafe terms. Images that violate a predetermined threshold are then covered with a black color field. While existing filters proved inadequate, Qu’s proposed filter achieved a considerably higher hit rate.
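
The distance-and-threshold idea can be illustrated with a minimal Python sketch. It is not the filter from the research: it assumes that the image and each unsafe term have already been mapped into a shared embedding space by some image-text model (not shown here), it uses cosine distance, and it treats an image as unsafe when it lies closer to any unsafe term than the threshold. The function names, the threshold value of 0.3, and the toy vectors are all hypothetical.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # treat a zero vector as maximally distant
    return 1.0 - dot / (norm_a * norm_b)


def filter_image(pixels, image_embedding, unsafe_embeddings, threshold=0.3):
    """Black out an image whose embedding is too close to an unsafe term.

    pixels: rows of (r, g, b) tuples.
    image_embedding, unsafe_embeddings: assumed outputs of a shared
    image-text embedding model (hypothetical, not part of this sketch).
    threshold: illustrative value, not taken from the research.
    Returns (pixels_or_black_field, was_blocked).
    """
    nearest = min(cosine_distance(image_embedding, e) for e in unsafe_embeddings)
    if nearest < threshold:
        black = [[(0, 0, 0) for _ in row] for row in pixels]
        return black, True
    return pixels, False


# Toy data: a 2x2 image and two made-up "unsafe term" embeddings.
image = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
unsafe_terms = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

near_result, near_blocked = filter_image(image, [0.9, 0.1, 0.0], unsafe_terms)
far_result, far_blocked = filter_image(image, [0.0, 0.0, 1.0], unsafe_terms)
```

In this toy run the first embedding sits close to an unsafe term, so the image is replaced by a black field, while the second is far from both terms and passes through unchanged. Where the threshold is set determines the trade-off between missed unsafe images and wrongly blocked harmless ones.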

Three Key Remedies for ‘Unsafe’ AI-Generated Images

Based on these findings, Qu proposed three main remedies to reduce the generation of unsafe images. First, developers need to curate training data more carefully, reducing the number of images with ambiguous content used when a model is trained or tuned. Second, model developers should restrict user input prompts and diligently remove potentially harmful terms.

Finally, there should be systems in place to identify unsafe images that are posted online and delete them, particularly on platforms where such images can be widely distributed.
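
The second remedy, screening user prompts, could look roughly like the following Python sketch. It is only an illustration under stated assumptions: the blocklist entries are placeholders, the function name `check_prompt` is hypothetical, and simple whole-word matching is just one possible approach rather than anything the research prescribes.

```python
import re

# Placeholder entries; a real deployment would maintain its own curated list.
BLOCKED_TERMS = {"blockedterm", "another blocked phrase"}


def check_prompt(prompt, blocked_terms=BLOCKED_TERMS):
    """Return (allowed, matches) for a user prompt.

    Matching is case-insensitive and applies to whole words or phrases,
    so a blocked term embedded in a longer word does not count.
    """
    # Lowercase and strip punctuation so matching is not thrown off by it.
    normalized = " ".join(re.findall(r"[a-z0-9']+", prompt.lower()))
    matches = sorted(
        term
        for term in blocked_terms
        if re.search(rf"\b{re.escape(term)}\b", normalized)
    )
    return (not matches, matches)


allowed_ok, matches_ok = check_prompt("A cat sleeping on a sofa")
allowed_bad, matches_bad = check_prompt("A cat with a BlockedTerm, please")
```

A list-based check like this is easy to evade with misspellings or paraphrases, which is one reason it is paired here with the other two remedies rather than used alone.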

Qu acknowledged the delicate balance between freedom of content and user safety, but she emphasized the need for strict regulation to keep harmful images from circulating widely on mainstream platforms. Her research aims to contribute to a safer digital landscape by reducing the prevalence of harmful images on the internet.

“There needs to be some compromise between the freedom of content and its safety. However, I believe that stringent control is required in order to stop these photographs from being widely shared on popular platforms,” Qu said.
